Since OpenAI launched GPT-4o, its most capable AI model to date, on 13 May 2024, comparisons with Google’s best AI model, Gemini 1.5 Pro, have been brewing.

In this GPT-4o vs. Gemini article, we aim to understand the latest advancements in natural language processing and multimodal understanding as the tech giants continue their race to push the boundaries of what AI can achieve.

The launch of these two powerful AI models has attracted many questions from users about their abilities, areas of assistance, limitations, new features, and ways to use them now.

Let’s dig into these questions and begin comparing the two!

GPT-4o vs Gemini 1.5 Pro – Overview

To begin our comparison, let’s take a closer look at the key features and capabilities of these two AI giants:

| Feature | GPT-4o | Gemini 1.5 Pro |
|---|---|---|
| Modalities Supported | Text, Audio, Vision, Video | Text, Audio, and Video |
| Context Window | Up to 128K tokens | Up to 1M tokens |
| Multilingual Support | Supports 100+ languages | Supports 100+ languages |
| Special Abilities | With vision, it can provide real-time feedback | Analyse and understand large data sets and documents up to 1,500 pages |
| Availability | Access is limited to text-based model only | Fully accessible AI model |
| Pricing | It can be used by ChatGPT free and Plus users currently | $19.99/month |
| Link | Use here | Use here |

As the table shows, both GPT-4o and Gemini 1.5 Pro share a common foundation in their ability to process and generate content across a wide range of modalities.

However, the models also exhibit some key differences, particularly in terms of their context window size, accessibility, and pricing structure.
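To make the context window difference concrete, here is a minimal sketch that checks whether a document would fit in each model's window. The window sizes come from the table above; the four-characters-per-token ratio is only a rough rule of thumb for English text (an assumption, since real tokenizers vary by model and language), and the function names are made up for illustration.

```python
# Context window sizes as quoted in the comparison table.
CONTEXT_WINDOWS = {
    "GPT-4o": 128_000,
    "Gemini 1.5 Pro": 1_000_000,
}

# Assumption: roughly 4 characters per token for English text.
# Real tokenizers differ by model and language; this is only a heuristic.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Return a rough token estimate: character count divided by 4, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def models_that_fit(text: str) -> list[str]:
    """List the models whose context window can hold the estimated token count."""
    needed = estimate_tokens(text)
    return [name for name, limit in CONTEXT_WINDOWS.items() if needed <= limit]


if __name__ == "__main__":
    # Hypothetical document of about 2 million characters.
    document = "x" * 2_000_000
    print(estimate_tokens(document))   # 500000
    print(models_that_fit(document))   # ['Gemini 1.5 Pro']
```

Under this heuristic, a document of around two million characters would overflow GPT-4o's 128K window but fit comfortably within Gemini 1.5 Pro's 1M window.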

What Is GPT-4o?

GPT-4o, OpenAI’s latest model, is a powerful and versatile AI system that can accept and generate content across various modalities, including text, audio, vision, and video.

It builds upon the success of its predecessor, GPT-4 Turbo, and aims to provide a more natural and seamless human-computer interaction experience.

GPT-4o New Features

Some of the key new features and capabilities of GPT-4o include:

- Multimodal Capabilities: GPT-4o can understand and generate content in multiple modalities, allowing for more natural and intuitive interactions. This includes processing and responding to audio, vision, and video inputs, in addition to traditional text-based interactions.

- Reduced Latency: GPT-4o can respond to audio inputs in as little as 232 milliseconds, approaching human-like response times. This is a significant improvement over the earlier voice pipeline, which had average latencies of 2.8 seconds with GPT-3.5 and 5.4 seconds with GPT-4.

- Improved Performance: GPT-4o matches or exceeds the performance of GPT-4 Turbo on text-based tasks while also demonstrating significant improvements in non-English languages, vision, and audio understanding. This enhanced capability ensures that GPT-4o can provide high-quality outputs across a diverse range of use cases and applications.

- Increased Efficiency: In the API, GPT-4o is 2x faster and 50% cheaper than GPT-4 Turbo, making it more accessible and cost-effective for developers and users. This efficiency boost is a crucial factor in driving wider adoption and real-world applications of the model.
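The latency and cost figures in the list above are easier to compare as ratios. The sketch below does that arithmetic. Note that it compares GPT-4o's best-case 232 ms against the earlier averages of 2.8 and 5.4 seconds, so it likely overstates the typical gap. The baseline price of 10.0 is a hypothetical placeholder, not a published rate.

```python
# Latency figures quoted in the feature list, converted to seconds.
GPT4O_BEST_CASE_S = 0.232
EARLIER_AVERAGES_S = (2.8, 5.4)


def speedup(old_seconds: float, new_seconds: float) -> float:
    """Return how many times shorter the new latency is than the old one."""
    return old_seconds / new_seconds


def price_after_discount(baseline: float, percent_off: float) -> float:
    """Apply a percentage discount to a baseline price."""
    return baseline * (1 - percent_off / 100)


if __name__ == "__main__":
    for old in EARLIER_AVERAGES_S:
        ratio = speedup(old, GPT4O_BEST_CASE_S)
        print(f"{old} s -> {GPT4O_BEST_CASE_S} s: about {ratio:.1f}x shorter")

    # Hypothetical baseline price per unit of usage (placeholder value).
    print(price_after_discount(10.0, 50))  # 5.0
```

On these numbers, the best-case response is roughly 12 to 23 times shorter than the earlier averages, and "50% cheaper" simply halves whatever the baseline price is.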

Read more about what’s new with OpenAI’s latest AI model here.

What Is Gemini 1.5 Pro?

Gemini 1.5 Pro is the latest member of Google’s Gemini family of multimodal models. Like GPT-4o, it can process and generate content across a wide range of modalities, including text, audio, and video.

According to Google’s own Gemini 1.5 Pro performance report, the latest update improves the model’s evaluation results by at least 10%.

Gemini 1.5 Pro New Features

Some of the key new features and capabilities of Gemini 1.5 Pro include:

- Expanded Context Window: Gemini 1.5 Pro can handle input contexts of up to 1 million tokens, a significant increase from the 32,768 token limit of its predecessor, Gemini 1.0 Pro. This expanded context window allows the model to leverage a much broader range of information and context when processing and generating content.

- Improved Multimodal Understanding: Gemini 1.5 Pro demonstrates enhanced performance on various multimodal benchmarks, including image, video, and audio understanding. This represents a significant advancement over the capabilities of Gemini 1.0 Pro, positioning the model as a more versatile and capable AI assistant.

- In-Context Learning: Gemini 1.5 Pro can learn new skills, such as translating between languages, directly from the information provided in its input context without requiring additional fine-tuning. This “in-context learning” capability enables the model to adapt and expand its knowledge more efficiently, potentially making it a more versatile and valuable AI tool.

- Efficiency Improvements: Gemini 1.5 Pro is more computationally efficient than Gemini 1.0 Pro, allowing for faster inference and lower costs (refer to the linked report above). This efficiency gain is particularly important in real-world applications, where speed and cost-effectiveness can be critical factors in determining the viability and scalability of AI-powered solutions.
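The in-context learning idea from the list above can be illustrated with a simple few-shot prompt: the "training data" is just a handful of examples placed in the input, with no fine-tuning involved. This is a minimal sketch of how such a prompt might be assembled; the function name, the prompt layout, and the example sentences are all illustrative assumptions rather than anything prescribed by Google.

```python
def build_few_shot_prompt(examples, query, source_lang, target_lang):
    """Assemble a translation prompt whose examples live entirely in the context.

    `examples` is a list of (source_text, target_text) pairs.
    """
    lines = [f"Translate from {source_lang} to {target_lang}."]
    for source_text, target_text in examples:
        lines.append(f"{source_lang}: {source_text}")
        lines.append(f"{target_lang}: {target_text}")
    # The final pair is left open so the model completes the translation.
    lines.append(f"{source_lang}: {query}")
    lines.append(f"{target_lang}:")
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical example pairs supplied in the prompt itself.
    pairs = [("Good morning", "Bonjour"), ("Thank you", "Merci")]
    print(build_few_shot_prompt(pairs, "See you tomorrow", "English", "French"))
```

A larger context window matters here because it sets the ceiling on how many examples, or how much reference material, can be packed into a single prompt.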

Comparing GPT-4o vs. Gemini 1.5 Pro

Now that we’ve explored the key features and capabilities of both GPT-4o and Gemini 1.5 Pro, let’s dive deeper into a side-by-side comparison across several important dimensions:

1. Accessibility

As of May 2024, here is who can use each model:

- GPT-4o: Currently, access to GPT-4o is limited to the model’s text capabilities, with OpenAI planning to release its showcased conversation feature soon.

- Gemini 1.5 Pro: Gemini 1.5 Pro, by contrast, is widely available with its 1M-token context window, and there is a waiting list for a larger 2M-token version.

This limited accessibility poses a challenge for users who are eager to experience the capabilities of these cutting-edge AI models.

Until OpenAI and Google make these models more widely available, the true potential and impact of GPT-4o and Gemini 1.5 Pro will remain largely untapped by the broader user community.

2. Performance

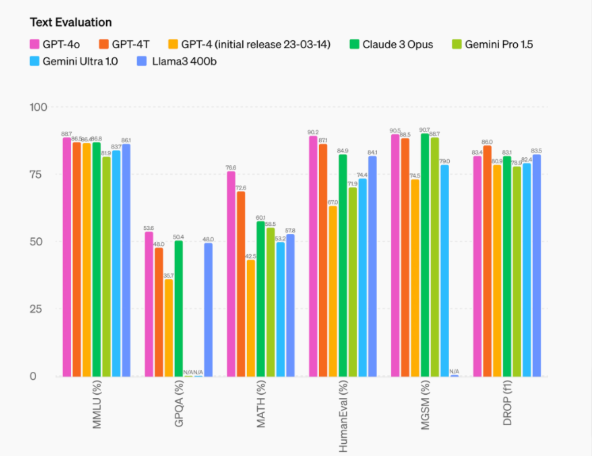

Both AI models have gone through extensive testing and surpassed the benchmarks their predecessors set across multiple abilities.

GPT-4o:

Matches or exceeds the performance of GPT-4 Turbo on text-based tasks while also demonstrating significant improvements in non-English languages, vision, and audio understanding.

OpenAI’s claims of GPT-4o’s superior multimodal capabilities have been backed by impressive results on a range of benchmarks.

Gemini 1.5 Pro:

Outperforms its predecessor, Gemini 1.0 Pro, on a wide range of benchmarks, including text, vision, and audio understanding.

It also sets new state-of-the-art results on several multimodal tasks, showcasing its enhanced capabilities in processing and generating content across different modalities.

While both models have demonstrated impressive performance, the available data suggests that GPT-4o may have a slight edge over Gemini 1.5 Pro in certain areas, particularly when it comes to audio and vision-related tasks.

However, it’s important to note that the performance gap between the two models may narrow or even reverse as Gemini 1.5 Pro continues to be refined and optimized.

3. Mobile Applications

Both AI models are accessible through mobile apps.

GPT-4o:

This new AI model is currently available in the same ChatGPT app that you use for GPT-3.5 or GPT-4. However, the conversation feature doesn’t have access to GPT-4o yet.

Gemini 1.5 Pro:

Unlike ChatGPT, Gemini has a separate app available on the Google Play Store and Apple App Store, with fully accessible features.

The absence of GPT-4o from the ChatGPT app’s conversation feature is a notable limitation, since the widespread adoption and real-world impact of these AI models will likely depend on how well they work on mobile.

As the technology continues to evolve, we can expect to see more concerted efforts from OpenAI and Google to bring these powerful AI models to the mobile realm.

4. API Access

Developers watch for API access every time an AI model launches. Let’s find out which AI model is better suited to build on.

GPT-4o:

GPT-4o is available in the OpenAI API as a text and vision model, with plans to add support for audio and video capabilities in the future.

This GPT-4o API access allows developers and researchers to leverage the model’s capabilities within their own applications and projects.
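As a rough illustration of what a text-plus-vision call might look like, the sketch below builds a request for OpenAI's Chat Completions endpoint using only the Python standard library. It constructs the request without sending it. The prompt, the image URL, and the placeholder key are hypothetical, and the payload shape reflects the API as documented around GPT-4o's launch, so it is worth checking against the current reference before relying on it.

```python
import json
import os
import urllib.request

OPENAI_CHAT_URL = "https://api.openai.com/v1/chat/completions"


def build_gpt4o_payload(prompt: str, image_url: str) -> dict:
    """Build a chat request body that pairs a text prompt with one image."""
    return {
        "model": "gpt-4o",
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


def build_request(payload: dict, api_key: str) -> urllib.request.Request:
    """Wrap the payload in an authenticated POST request (not sent here)."""
    return urllib.request.Request(
        OPENAI_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical prompt and image URL, for illustration only.
    payload = build_gpt4o_payload(
        "What is in this image?", "https://example.com/photo.jpg"
    )
    request = build_request(
        payload, os.environ.get("OPENAI_API_KEY", "sk-placeholder")
    )
    print(json.dumps(payload, indent=2))
    # Sending would be: urllib.request.urlopen(request), with a real API key.
```

In practice most developers would use OpenAI's official client library instead; the raw request is shown here only to make the structure of a multimodal message visible.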

Gemini 1.5 Pro:

Gemini 1.5 Pro is available in the Google Cloud Vertex AI platform as a text and vision model, with plans to expand to other modalities.

This integration with Google’s cloud infrastructure provides users with a familiar and well-supported environment for building AI-powered solutions.
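For comparison, here is a similar sketch for Vertex AI's `generateContent` REST method. It only assembles the URL and request body. The project ID, region, model ID, and Cloud Storage URI are hypothetical placeholders, and the field names follow my reading of the Vertex AI REST format, so treat them as assumptions to verify against Google's current documentation.

```python
import json


def vertex_generate_url(project_id: str, location: str, model_id: str) -> str:
    """Build the generateContent endpoint URL for a Google-published model."""
    return (
        f"https://{location}-aiplatform.googleapis.com/v1/"
        f"projects/{project_id}/locations/{location}/"
        f"publishers/google/models/{model_id}:generateContent"
    )


def build_gemini_payload(
    prompt: str, image_uri: str, mime_type: str = "image/jpeg"
) -> dict:
    """Build a request body that pairs one image file with a text prompt."""
    return {
        "contents": [
            {
                "role": "user",
                "parts": [
                    {"fileData": {"mimeType": mime_type, "fileUri": image_uri}},
                    {"text": prompt},
                ],
            }
        ]
    }


if __name__ == "__main__":
    # All identifiers below are placeholders, not real resources.
    url = vertex_generate_url("my-project", "us-central1", "gemini-1.5-pro")
    body = build_gemini_payload(
        "Describe this picture.", "gs://my-bucket/photo.jpg"
    )
    print(url)
    print(json.dumps(body, indent=2))
```

An actual call would also need an OAuth access token in the `Authorization` header, which Google Cloud's client libraries normally handle for you.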

The availability of API access is a crucial factor for both GPT-4o and Gemini 1.5 Pro, as it enables a wider range of developers and organizations to integrate these advanced AI models into their products and services.

5. Pricing

How big a hole will these AI models burn in your pocket? There’s some good news for you.

GPT-4o:

The GPT-4o model is available to both free and paid ChatGPT subscribers, with different usage limits for each tier.

OpenAI has not publicly announced ChatGPT pricing specific to GPT-4o. The company has so far been less explicit about what the model will cost consumers, while allowing free access.

Gemini 1.5 Pro:

Access to Gemini 1.5 Pro costs $19.99 per month. This transparent pricing makes the cost of using the model clear up front.

A direct price comparison between GPT-4o and Gemini 1.5 Pro isn’t possible as of now, but it will be interesting to see how much OpenAI charges for GPT-4o.

While OpenAI’s reluctance to disclose pricing details may raise concerns about potential hidden costs, Google’s upfront and competitive pricing for Gemini 1.5 Pro could give it an advantage in certain use cases and market segments.

Conclusion: GPT-4o vs. Gemini 1.5 Pro Needs More User Experiences

While both GPT-4o and Gemini 1.5 Pro are impressive and represent significant advancements in the field of AI, it is still too early to declare a clear winner. These models were only recently launched, and users have yet to explore their full capabilities and limitations thoroughly.

One of the main challenges is the limited accessibility of these models. Neither GPT-4o nor Gemini 1.5 Pro is currently available for extended free usage to the general public: GPT-4o’s free tier comes with usage limits and only its text capabilities, while Gemini 1.5 Pro requires a paid subscription.

This makes it difficult for users to gain hands-on experience and provide feedback on the models’ capabilities and performance.

In the meantime, we encourage interested users to stay informed about the latest developments and to provide feedback to the OpenAI and Google teams to help shape the future of these powerful AI models.

Only with a deeper understanding of how these models perform in real-world scenarios can we truly assess which of these AI giants will come out on top in the long run.